Inverse Rendering of Glossy Objects via the Neural Plenoptic Function and Radiance Fields (CVPR 2024)

We propose a novel 5D Neural Plenoptic Function (NeP), built on NeRFs and ray tracing, for inverse rendering of glossy objects that reconstructs both geometry and materials.

Abstract

Inverse rendering, which aims to recover both the geometry and the materials of objects, yields reconstructions that are more compatible with conventional rendering engines than those of the popular neural radiance fields (NeRFs). However, existing NeRF-based inverse rendering methods handle glossy objects with local light interactions poorly, because they typically oversimplify the illumination as a 2D environment map, which assumes only infinitely distant lights. Observing the strength of NeRFs in recovering radiance fields, we propose a novel 5D Neural Plenoptic Function (NeP) based on NeRFs and ray tracing, so that lighting-object interactions can be formulated more accurately via the rendering equation. We also design a material-aware cone sampling strategy that efficiently integrates the light inside the BRDF lobes with the assistance of pre-filtered radiance fields. Our method has two stages: the first reconstructs the geometry of the target object and the pre-filtered environmental radiance fields, and the second estimates the materials of the target object with the proposed NeP and material-aware cone sampling strategy. Extensive experiments on the proposed real-world and synthetic datasets demonstrate that our method can reconstruct high-fidelity geometry and materials of challenging glossy objects with complex lighting interactions from nearby objects. We will release the code and dataset.
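Why a 5D function matters can be seen from the rendering equation, $L_o(\mathbf{x},\omega_o)=\int_{\Omega} f_r(\mathbf{x},\omega_i,\omega_o)\,L_i(\mathbf{x},\omega_i)\,(\mathbf{n}\cdot\omega_i)\,d\omega_i$. A 2D environment map replaces $L_i(\mathbf{x},\omega_i)$ with $L_{\text{env}}(\omega_i)$ and so drops the dependence on the surface point $\mathbf{x}$; a 5D plenoptic function keeps it, which is what lets nearby objects show up in reflections. The code below is a minimal toy sketch under stated assumptions, not the authors' implementation: `toy_plenoptic` is a hypothetical analytic stand-in for the learned NeP (a constant sky plus one nearby sphere), `cone_half_angle` is a hypothetical linear roughness-to-aperture mapping, and the BRDF lobe is treated as uniform over the cone with the cosine term and importance weights omitted. The pre-filtered radiance fields used in the paper are not modeled; each cone is integrated here by plain Monte Carlo sampling.

```python
import functools

import numpy as np


def reflect(view_dir, normal):
    """Mirror-reflect the unit direction pointing from the surface toward the camera."""
    return 2.0 * np.dot(view_dir, normal) * normal - view_dir


def cone_half_angle(roughness, max_angle=np.pi / 4):
    """Hypothetical mapping: rougher materials get wider cones (not the paper's formula)."""
    return max_angle * float(np.clip(roughness, 0.0, 1.0))


def orthonormal_basis(axis):
    """Return two unit vectors that, together with `axis`, form an orthonormal frame."""
    helper = np.array([1.0, 0.0, 0.0]) if abs(axis[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    t = np.cross(axis, helper)
    t /= np.linalg.norm(t)
    b = np.cross(axis, t)
    return t, b


def sample_cone(axis, half_angle, n, rng):
    """Draw n unit directions uniformly over the spherical cap of the given half-angle."""
    cos_max = np.cos(half_angle)
    cos_t = 1.0 - rng.random(n) * (1.0 - cos_max)  # cos(theta) uniform in [cos_max, 1]
    sin_t = np.sqrt(np.clip(1.0 - cos_t**2, 0.0, None))
    phi = 2.0 * np.pi * rng.random(n)
    t, b = orthonormal_basis(axis)
    return (np.outer(sin_t * np.cos(phi), t)
            + np.outer(sin_t * np.sin(phi), b)
            + np.outer(cos_t, axis))


def toy_plenoptic(x, dirs, sphere_center, sphere_radius, sphere_rgb, sky_rgb):
    """Hypothetical 5D radiance L(x, w): rays from x that hit a nearby sphere see its
    color; all other rays see a constant sky. Assumes x lies outside the sphere."""
    oc = x - sphere_center
    b = dirs @ oc                      # half of the quadratic's linear coefficient
    c = oc @ oc - sphere_radius**2
    disc = b**2 - c
    t_near = -b - np.sqrt(np.clip(disc, 0.0, None))
    hit = (disc >= 0.0) & (t_near > 0.0)
    return np.where(hit[:, None], sphere_rgb, sky_rgb)


def shade_specular(x, normal, view_dir, roughness, spec_rgb, radiance_fn,
                   n_samples=256, seed=0):
    """Average incoming radiance over a roughness-sized cone around the mirror direction.
    Simplification: uniform lobe over the cone, no cosine term or importance weights."""
    rng = np.random.default_rng(seed)
    axis = reflect(view_dir, normal)
    axis = axis / np.linalg.norm(axis)
    dirs = sample_cone(axis, cone_half_angle(roughness), n_samples, rng)
    return spec_rgb * radiance_fn(x, dirs).mean(axis=0)


# Usage: two floor points with the same normal and view direction, one next to a red sphere.
scene = dict(sphere_center=np.array([2.0, 0.0, 2.0]), sphere_radius=0.5,
             sphere_rgb=np.array([0.9, 0.2, 0.1]), sky_rgb=np.array([0.6, 0.7, 1.0]))
radiance = functools.partial(toy_plenoptic, **scene)
normal = np.array([0.0, 0.0, 1.0])
view_dir = np.array([-1.0, 0.0, 1.0]) / np.sqrt(2.0)
near = shade_specular(np.array([0.0, 0.0, 0.0]), normal, view_dir, 0.0, np.ones(3), radiance)
far = shade_specular(np.array([0.0, 5.0, 0.0]), normal, view_dir, 0.0, np.ones(3), radiance)
rough = shade_specular(np.array([0.0, 0.0, 0.0]), normal, view_dir, 0.5, np.ones(3), radiance)
```

Both points share the same reflection direction, yet only the first one's mirror lobe reaches the sphere, so `near` and `far` differ; a lookup indexed by direction alone would return the same value for both. With a rougher material the wider cone mixes sphere and sky radiance, which is the kind of local interaction a material-aware cone is meant to capture.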

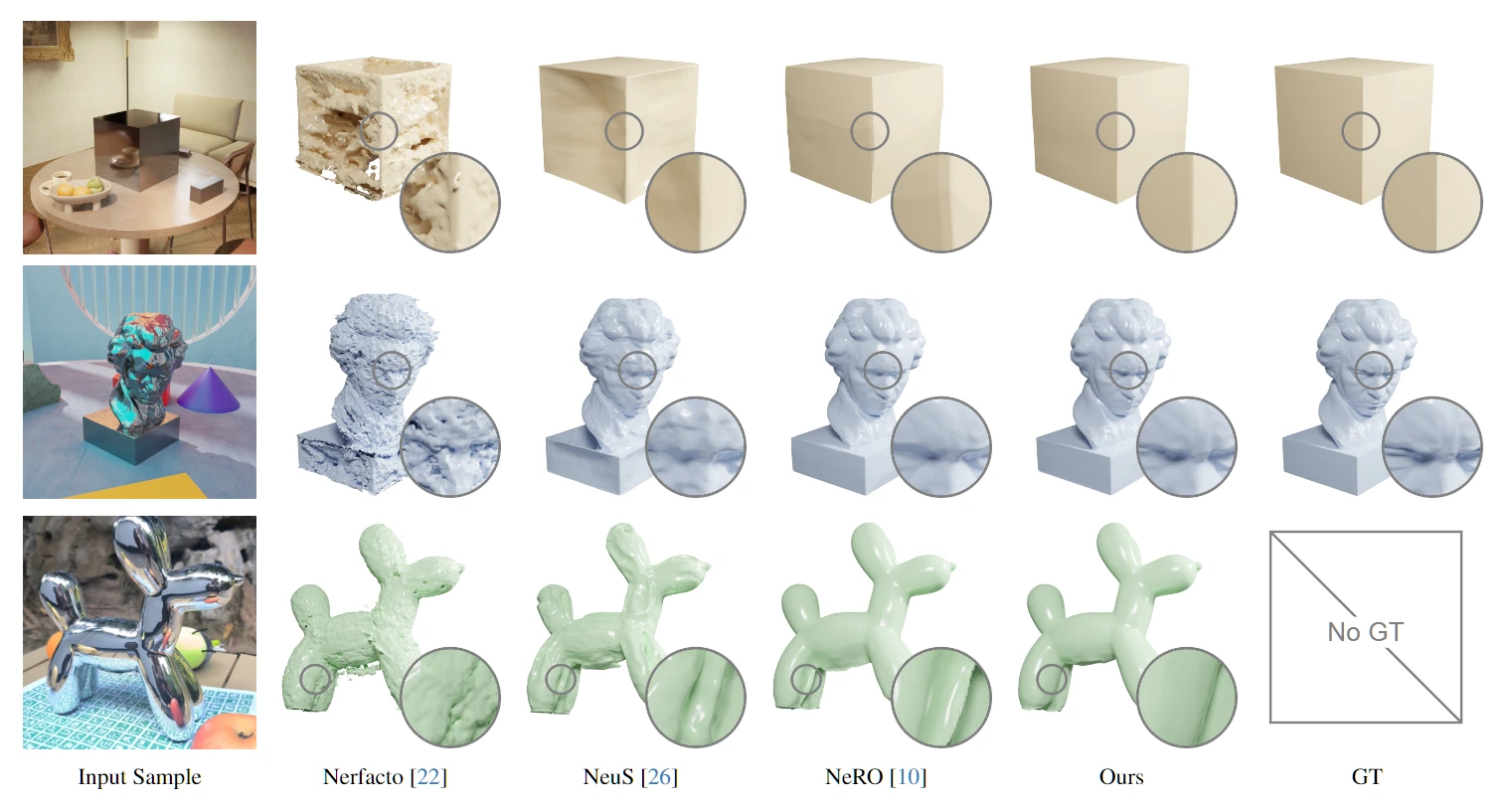

Comparison of geometry reconstruction between state-of-the-art methods and ours.

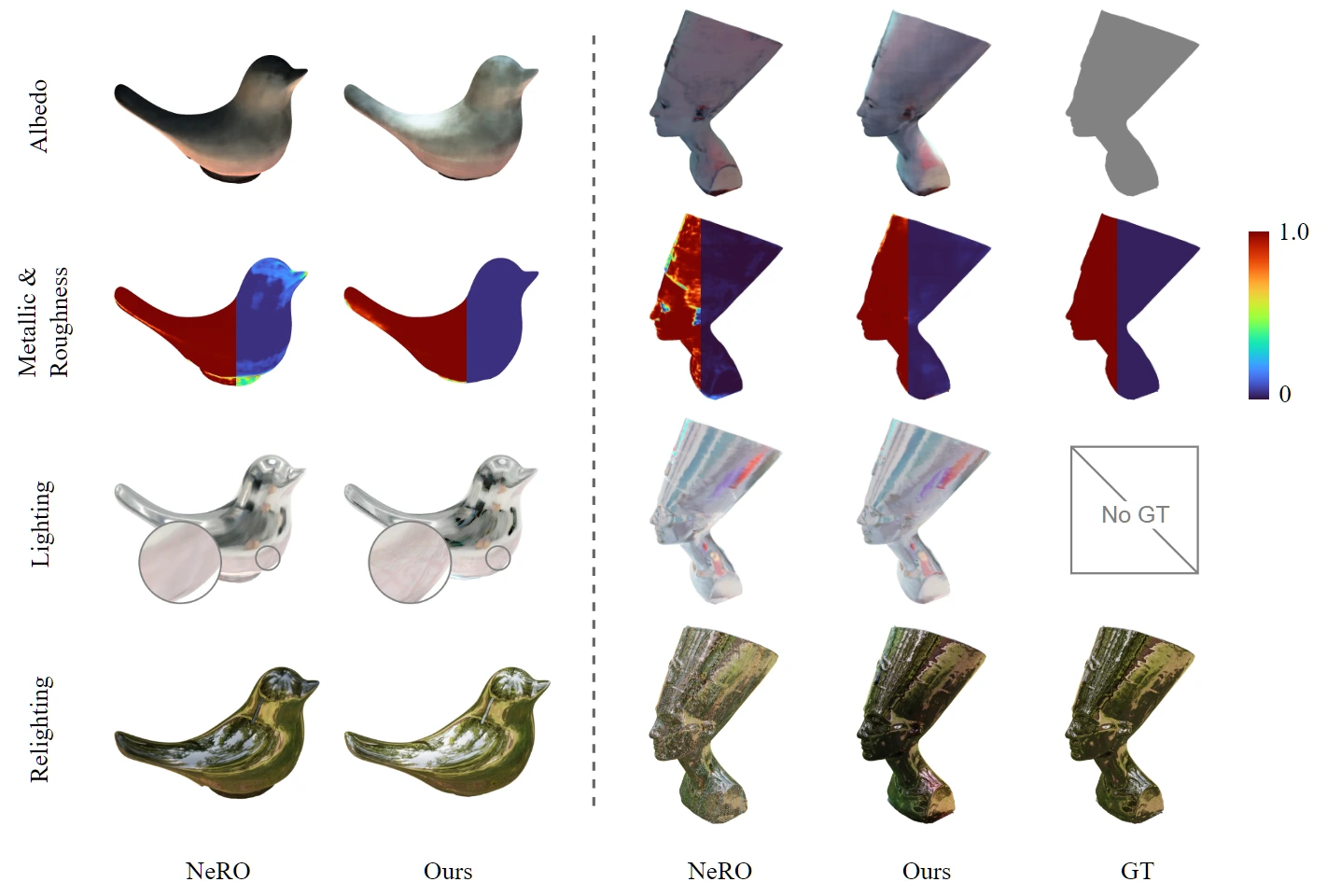

Comparison of material estimation results.

@inproceedings{wang2024nep,

title={Inverse Rendering of Glossy Objects via the Neural Plenoptic Function and Radiance Fields},

author={Haoyuan Wang and Wenbo Hu and Lei Zhu and Rynson W.H. Lau},

booktitle={CVPR},

year={2024}

}